The panel began with summaries of key findings from each of the earlier presentations:

Mike Follet: Research project that tries to understand visual attention in cinema vs other media.

Marc Guldimann: AU metric proving connection between AU and outcomes.

Elise Temple: Facial coding is not the best measure to demonstrate emotional engagement.

Bill Harvey: Analysis of 5 brands across digital environments showing that stream got the most viewability and engagement; feed got the most positive clicks (engagements); all got the same level of recognition, persuasion, and ad liking.

Duane Varan: EEG and heart rate are superior measures of attention. Utility of using fitness-tracking data.

Johanna Welch: Predictive proxies for sales and the role that attention plays in that. Built a model showing that improved assets drive double-digit sales lift.

Pedro Almeida: MindProber is a tool that collects physiological responses. The research shows how NBC Universal uses this tool to show the value of their IP, and how the metrics are a valid signal of the quality of context and have predictive power.

Karen Nelson-Field: What we saw across all presentations is that there is an agreement that attention is valuable; it is different from viewability. There is a gap in applied technology. All agree that the definition is the same: a human paying attention, stopping what they’re doing, and filtering out other distractors. Vendors, however, differ in how they operationalize the definition.

After these statements, the panelists responded to and discussed the following questions from the audience:

- How are you thinking about different environments where we conduct this work? Outside labs, outside MRIs. This is one of the most complicated parts of designing attention metrics. Logistics we need to deal with.

Pedro: There are challenges in collecting signals out of the lab. The signal is noisier. To counter this, we built our platform for use outside the lab. We also took movement artifacts into consideration: are people moving or not? We can understand the types of movement artifacts. Finally, we also took sample size into consideration, to average out what is not synchronized with content.
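The last point, using sample size to average out what is not synchronized with content, can be illustrated with a toy simulation. This is only a sketch under stated assumptions, not MindProber's actual pipeline: the content-locked `signal`, the Gaussian noise model, and all function names are hypothetical and chosen for illustration.

```python
import random
import statistics

def simulate_panel(signal, n_participants, noise_sd, seed=0):
    """Return one noisy trace per participant (hypothetical noise model).

    Each trace is the shared, content-locked signal plus independent
    Gaussian noise standing in for unsynchronized activity.
    """
    rng = random.Random(seed)
    return [
        [s + rng.gauss(0.0, noise_sd) for s in signal]
        for _ in range(n_participants)
    ]

def average_across_participants(traces):
    """Average the traces time point by time point."""
    return [statistics.fmean(samples) for samples in zip(*traces)]

def rms_error(estimate, signal):
    """Root-mean-square distance between an estimate and the true signal."""
    squared = [(e - s) ** 2 for e, s in zip(estimate, signal)]
    return (sum(squared) / len(signal)) ** 0.5

# Made-up content-locked response, repeated to give 200 time points.
signal = [0.0, 0.2, 1.0, 0.6, 0.1, 0.0, 0.8, 0.3] * 25

small = average_across_participants(simulate_panel(signal, 5, noise_sd=1.0))
large = average_across_participants(simulate_panel(signal, 200, noise_sd=1.0))

print("RMS error, 5 participants:  ", round(rms_error(small, signal), 3))
print("RMS error, 200 participants:", round(rms_error(large, signal), 3))
```

Because the simulated noise is independent across participants, it shrinks roughly with the square root of the sample size, while the part of the response that is synchronized with content survives the averaging. Real out-of-lab data also contains correlated artifacts such as movement, which this sketch does not model.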

Elise: We know context matters and that’s why creative matters. Knowing when to test for which is important. Isolating the creative could be the best way to understand creative because context has so much noise.

Duane: Attention is the new pink. We should at the same time have a talk about distraction: distraction is a much bigger issue today than in the past. People are on their mobile phones, in a multi-screen experience. There are ways we can tackle that, but this needs to be another conversation. How can we systematically understand the types of distraction and which remedies could be applied? One of the most exciting things is providing remedies.

Bill: It depends on the use case. Neurological and biometric measures are more sensitive and can get bruised in the wild, whereas others like eye tracking may not be technically as good but are robust in the wild.

Mike: Not less ambitious. The data doesn’t have to be collected in the lab. Collect tons and tons of data, but also understand that attention is a process. It is what happens afterwards as well. An additional way to think about this: take data, build predictive models of attention, and apply the models to live datasets to do live experiments and link to outcomes. This is validation of attention, but in a sense, it is the attention data. There is a multimodality here. Collect data on a large scale, apply it to live campaigns, and learn something as a result.

Marc: When taking something from the lab to the wild, the key is to understand how things work differently when measuring vs. optimizing. Duration of attention is correlated with memory. When you take this simplistic measure of attention time or duration into the wild, you see that a metric that is good for analysis fails when you start to optimize it. Be careful about the incentives that we are creating and the fixation on duration of attention. As an example, older people pay attention for twice as long as younger people. This is a risk problem. You do not want to judge the quality of media based on how long people pay attention to an ad. The earlier that brands appear in ads, the less attention people pay. So be careful about the incentives of the metrics that we are creating.

Karen: This is about outcomes. The neuro side thinks computer vision is simplistic and biased, with no understanding of cognitive processing. But they’re actually wrong, because we access the nature of a third dimension, which is how attention shifts (ten different types of shifts, concentration, inattention, and so on), and explore how this is all connected to processing, to action, and to outcome. How does inattention play a role, and what is the interplay between all the different types of attention and outcome?

Elise: Attention is super complicated. “Attention is not always good” is too simplistic. We never look at it by itself. Attention evolved to shift focus for all sorts of reasons. It is an ambivalent, agnostic measure, so you need to tie it to other things.

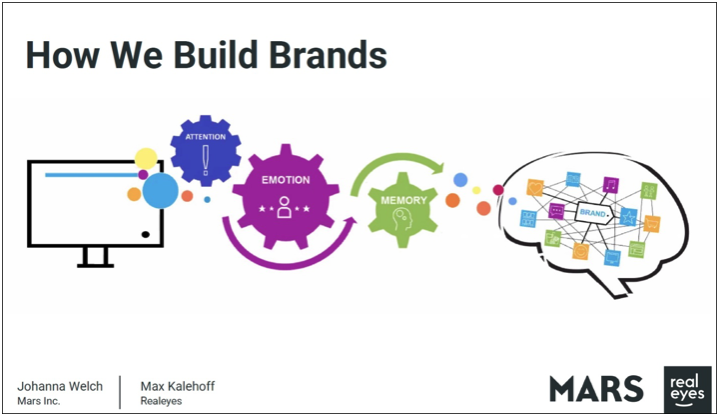

Johanna: The connection with sales. A benchmark for whether it is working or not, and whether it is the right size for what we need it to do. It’s not one size fits all. Often when partnering with a vendor we pair the data with additional things. To get the best sales outcomes we need to marry data: we can’t talk about attention without emotion, where the brand is, memory, fit for platform, and so on. From an advertiser’s point of view, we start taking different data into models and correlating this to sales. Be open to different approaches and look at outcomes.

- EEG and facial coding. I love both. We analyzed the same ads and saw similar insights: biofeedback. Regardless of method, they all point in similar directions. Question to Johanna: you migrated from absolute neuro-based measures to facial coding. What is your take on it in terms of the technologies?

Johanna: Both sit in our tool kit. As a company we spent years investigating these different measures. Digital changed circumstances, so we had to pivot to something that was close enough: for deep, high-risk campaigns we recommend using neuro; for turn-key digital we can lean on facial coding data married with other measures. So this depends on how deep you need to go into diagnostics vs. more of a gut check. It’s right-sized for what we need to do as a business.

- I disagree about whether attention is defined or not. This is not about attention. It’s about the quality of impression and content, and how we value this. It’s not a question of replacing viewability; rather, how do we come together as an industry to operationalize this and build it into the supply chain so that every advertiser knows what to do with it and doesn’t need a data-science team to figure it out?

Duane: Kudos to the ARF. Attention is complex. You need to have people with appropriate expertise to evaluate it. What came out of “Neuro Standards 1”: people knew what to ask and how to engage with the conversation in a way that was different than before. The ARF is filling a critical gap because we need validation to understand what these measures mean.

Karen: There are two sets of vendors here: deep and gut check (more academic than gut check, but still). I understand diagnostics. I understand what the brain does, but I also agree that for those with a media background we need to fix the “all reach is not equal” issue. Yes, it is complex, but at the end of the day we need to help advertisers when they aren’t getting what they think they’re paying for. This is critical and has a critical effect on business models and concepts.

Marc: Free market approach. Let a thousand flowers bloom and look at the outcomes. This is all that matters. Nuances of how to capture attention—maybe this matters for creative, but for media come up with a metric and see how it plays out.

Mike: You’re absolutely right. Attention is essential to human existence. No single metric will capture it exactly. Instead of waiting until we have an elusive perfect definition, we should adopt a pragmatist approach and see what works.

Duane: I disagree. This is Audience X Science. For science—if we are going to call this attention, let’s make sure it is attention and not something else.

Bill: Let’s not call it attention but rather impression quality. If we’re trying to predict sales, let’s find what best predicts sales.