Measuring the impact of in-market investments can be like finding the proverbial needle in a haystack. Combining the granularity of panel data with the heft of Big Data can make finding that needle a lot easier. Oracle Data Cloud shows how it brings the two data sources together.

The ARF has announced two new efforts to tackle data quality questions:

Data Labeling Initiative - with the ANA’s Data Marketing & Analytics division, the IAB Tech Lab, and the Coalition for Innovative Media Measurement (CIMM).

Data Validation Initiative - independently conducting research to determine whether surveys can be used to measure the accuracy of those targets.

Academic advertising researchers argue that multiple measures are needed for accuracy. Practitioners argue that a single measure of attitudes is sufficient. Who’s right? This article suggests that one valid measurement item that fully captures the semantic meaning of the construct is better than multiple bad ones, no matter how internally consistent the measurement scale may be.

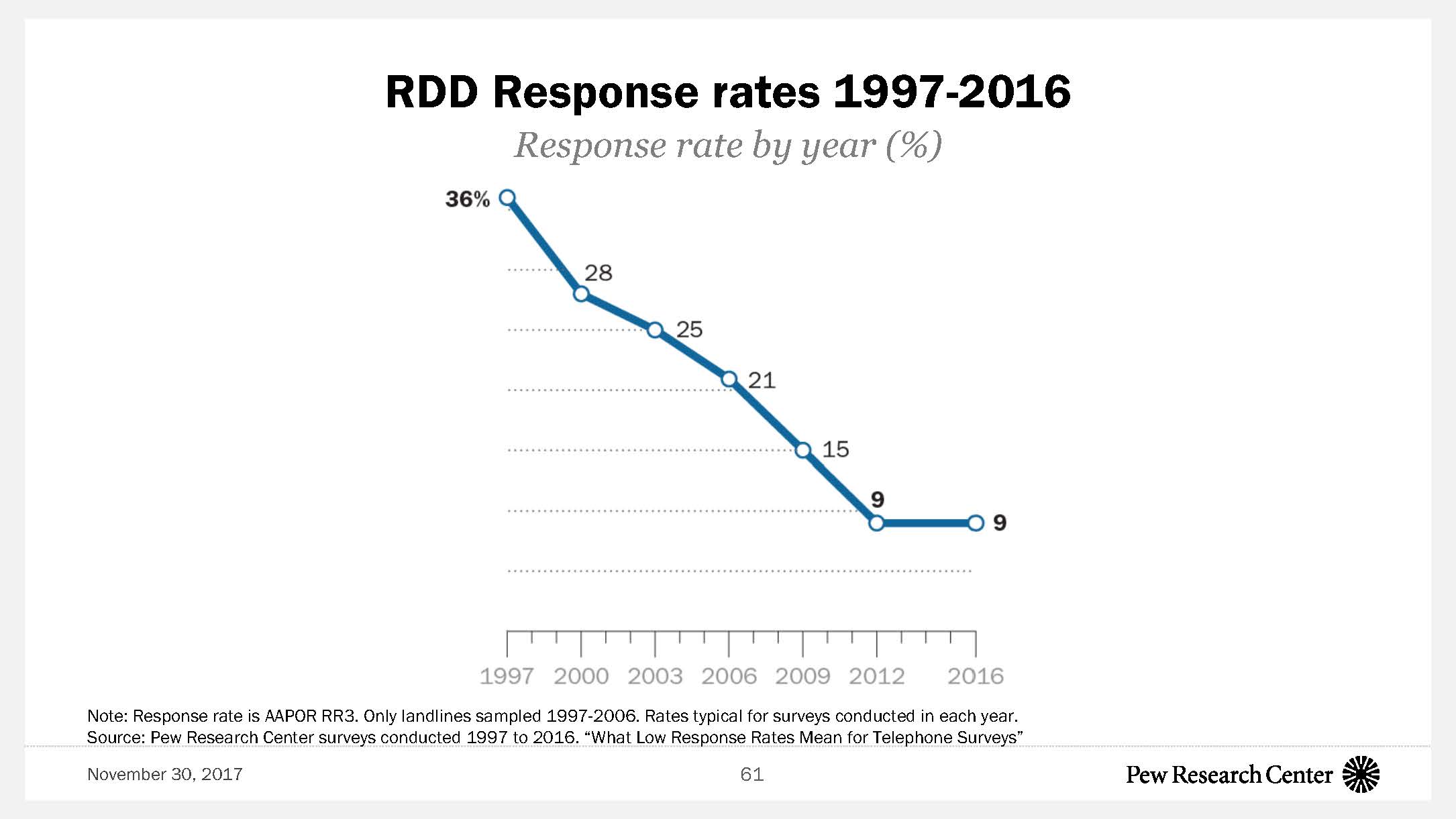

The continuing rise in non-response rates has led to growing concern about the representativeness of surveys. Andrew Mercer, Senior Research Methodologist at the Pew Research Center, provides an overview of the issue along with best practices in modeling representativeness, such as thinking through your modeling assumptions before collecting data.